Performance Measurement

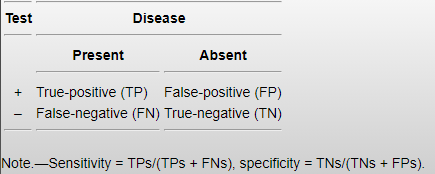

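The basic measures of test accuracy, provided the outcome is binary (that is, pathology is either present or absent), are sensitivity and specificity. Sensitivity is the proportion of truly abnormal studies that are reported as abnormal; specificity is the proportion of truly normal studies that are reported as normal.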
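As a minimal sketch, assuming the true positive (TP), false negative (FN), true negative (TN) and false positive (FP) counts have already been tallied against the reference standard, the two measures reduce to simple proportions. The function names and audit figures below are hypothetical, for illustration only.

```python
def sensitivity(tp: int, fn: int) -> float:
    """Proportion of truly abnormal studies that the reporter called abnormal."""
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """Proportion of truly normal studies that the reporter called normal."""
    return tn / (tn + fp)


# Hypothetical audit counts, for illustration only:
# 50 abnormal studies (45 reported abnormal), 100 normal studies (95 reported normal).
print(f"Sensitivity: {sensitivity(tp=45, fn=5):.1%}")  # Sensitivity: 90.0%
print(f"Specificity: {specificity(tn=95, fp=5):.1%}")  # Specificity: 95.0%
```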

A crude figure for overall performance in image reporting, usually called accuracy, may be calculated by adding the number of true positive reports to the number of true negative reports and dividing by the total number of reports. This assumes that the ground truth (or reference standard) is known; in reporting practice, the reference standard is expert opinion, generally from a consultant radiologist, or more definitive follow-up testing such as cross-sectional imaging.
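The calculation can be sketched as follows, assuming each audited report has been reduced to a pair of booleans: whether the reporter called the study abnormal, and whether the reference standard found it abnormal. The `tally` and `accuracy` helpers and the five-report audit are hypothetical.

```python
def tally(pairs):
    """Count TP, TN, FP and FN from (reported_abnormal, truly_abnormal) pairs.

    'truly_abnormal' comes from the reference standard, e.g. a consultant
    radiologist's opinion or follow-up cross-sectional imaging.
    """
    counts = {"tp": 0, "tn": 0, "fp": 0, "fn": 0}
    for reported, truth in pairs:
        if reported and truth:
            counts["tp"] += 1
        elif reported and not truth:
            counts["fp"] += 1
        elif not reported and not truth:
            counts["tn"] += 1
        else:
            counts["fn"] += 1
    return counts


def accuracy(counts):
    """Crude overall accuracy: (TP + TN) divided by all reports."""
    total = sum(counts.values())
    return (counts["tp"] + counts["tn"]) / total


# Hypothetical audit of five reports: (reporter said abnormal?, reference standard abnormal?)
audit = [(True, True), (True, False), (False, False), (False, False), (False, True)]
counts = tally(audit)
print(counts)                     # {'tp': 1, 'tn': 2, 'fp': 1, 'fn': 1}
print(f"{accuracy(counts):.1%}")  # 60.0%
```

Note that accuracy alone hides the balance between missed pathology (FN) and overcalls (FP), which is why sensitivity and specificity are reported separately.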

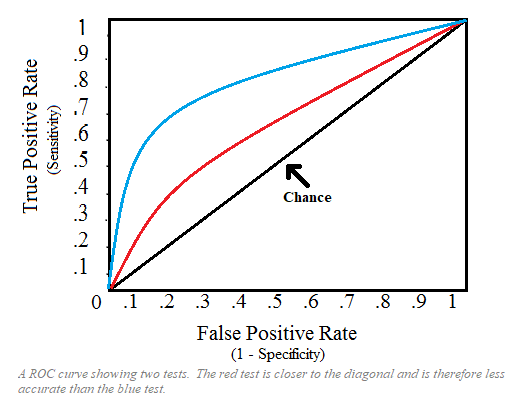

Radiographs are rarely so helpfully dichotomous in their outcomes; instead, the report may only offer a likelihood that a pathology is present or absent. When measuring performance, particularly across a group of reporters or for a single reporter over time, it is therefore useful to record an index of confidence with each report. This provides a measurable, continuous outcome from a report that is rendered dichotomous out of clinical necessity. When assessing the accuracy of a test with a continuous (or graded) outcome, a receiver operating characteristic (ROC) curve may be plotted: each confidence level is treated in turn as the cut-off for calling a study abnormal, and the resulting sensitivity is plotted against the false positive rate (1 - specificity).
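A minimal sketch of how the ROC points could be derived, assuming a hypothetical 5-point confidence scale (1 = definitely normal, 5 = definitely abnormal) and that a study counts as "reported abnormal" at cut-off `t` when its rating is `t` or higher. The `roc_points` function and the ten-study dataset are illustrative only.

```python
def roc_points(ratings, truths, levels=(1, 2, 3, 4, 5)):
    """Return (false positive rate, true positive rate) pairs, one per cut-off.

    ratings: confidence rating per study on the assumed 1-5 scale.
    truths:  True if the reference standard found pathology.
    """
    positives = sum(truths)
    negatives = len(truths) - positives
    points = [(0.0, 0.0)]  # cut-off above the top of the scale: nothing called abnormal
    for t in sorted(levels, reverse=True):
        tp = sum(1 for r, y in zip(ratings, truths) if r >= t and y)
        fp = sum(1 for r, y in zip(ratings, truths) if r >= t and not y)
        points.append((fp / negatives, tp / positives))
    return points


# Hypothetical confidence ratings and reference-standard outcomes for ten studies
ratings = [5, 4, 4, 2, 3, 1, 2, 1, 3, 5]
truths = [True, True, False, False, True, False, False, False, True, True]
print(roc_points(ratings, truths))
# [(0.0, 0.0), (0.0, 0.4), (0.2, 0.6), (0.2, 1.0), (0.6, 1.0), (1.0, 1.0)]
```

The resulting pairs could then be passed to any plotting library, with the false positive rate on the x-axis and sensitivity on the y-axis.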

The area under the ROC curve (AUC) summarises the diagnostic value of the test across all cut-offs; a greater AUC is more desirable, an AUC of 0.5 (50%) is no better than random chance, and an AUC of 1.0 indicates perfect discrimination. If we only wish to measure and compare the diagnostic value of the test over a particular range of cut-offs, we may measure a partial AUC (Obuchowski 2005). From the counts used to calculate sensitivity and specificity, further measures of performance can be derived, such as the positive and negative predictive values and the positive and negative likelihood ratios. Outside the research setting, however, and for the purpose of radiographer reporting (RR) audit in the clinical setting, sensitivity and specificity are generally sufficient.
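The sketch below illustrates these measures under stated assumptions: the AUC is computed by the trapezoidal rule over hypothetical (false positive rate, true positive rate) points sorted by false positive rate, and the partial AUC is the unstandardised area up to a chosen maximum false positive rate (standardised variants exist but are not shown). The predictive values and likelihood ratios use the standard definitions; all function names and figures are illustrative.

```python
def auc_trapezoid(points):
    """Area under an empirical ROC curve from (FPR, TPR) points sorted by FPR."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


def partial_auc(points, max_fpr):
    """Unstandardised area under the ROC curve for FPR between 0 and max_fpr."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if x0 >= max_fpr:
            break
        if x1 > max_fpr:
            # Linearly interpolate the TPR where this segment crosses max_fpr
            y1 = y0 + (y1 - y0) * (max_fpr - x0) / (x1 - x0)
            x1 = max_fpr
        area += (x1 - x0) * (y0 + y1) / 2
    return area


def derived_measures(tp, fn, tn, fp):
    """Predictive values and likelihood ratios from the same 2x2 counts."""
    sens = tp / (tp + fn)
    spec = tn / (tn + fp)
    return {
        "PPV": tp / (tp + fp),
        "NPV": tn / (tn + fn),
        "LR+": sens / (1 - spec) if spec < 1 else float("inf"),
        "LR-": (1 - sens) / spec,
    }


# Hypothetical ROC points from a ten-study confidence-rated audit
points = [(0.0, 0.0), (0.0, 0.4), (0.2, 0.6), (0.2, 1.0), (0.6, 1.0), (1.0, 1.0)]
print(f"AUC: {auc_trapezoid(points):.2f}")                         # AUC: 0.90
print(f"Partial AUC (FPR <= 0.2): {partial_auc(points, 0.2):.2f}")  # Partial AUC (FPR <= 0.2): 0.10
print(derived_measures(tp=45, fn=5, tn=95, fp=5))
```

For ordinal confidence ratings, the trapezoidal AUC equals the Mann-Whitney estimate with ties counted as half. The predictive values, unlike sensitivity and specificity, depend on the prevalence of pathology in the audited sample.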

Standards of performance

The law requires that those entitled by an employer to perform clinical evaluation are trained and competent to do so. This involves the reporter holding an appropriate postgraduate qualification, but it also includes continuing participation in training, audit and continuing professional development following demonstration of an accuracy level of 95% over an initial 6-month preceptorship period.
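To make the 95% figure concrete, the arithmetic can be sketched as below, assuming the target is a whole-number percentage (integer arithmetic then avoids floating-point rounding at the boundary). The helper names are hypothetical, and the target is a parameter because the figure adopted locally may differ.

```python
def meets_target(concordant: int, total: int, target_percent: int = 95) -> bool:
    """True if concordant / total is at least target_percent (assumed whole number)."""
    return concordant * 100 >= target_percent * total


def max_discrepancies(total: int, target_percent: int = 95) -> int:
    """Largest number of discrepant reports that still meets the target."""
    return total * (100 - target_percent) // 100


print(max_discrepancies(200))      # 10
print(meets_target(190, 200))      # True
print(meets_target(188, 200))      # False
print(max_discrepancies(200, 90))  # 20
```

In other words, a reporter with 200 audited cases during preceptorship could have at most 10 confirmed discrepancies and still meet a 95% target.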

The Society of Radiographers acknowledges that “the performance standards to be achieved by radiographers undertaking preliminary clinical evaluation and clinical reporting are difficult to define in quantitative terms”. While the target accuracy of 95% has long been accepted, this figure is based on what can be achieved rather than on a governing body's recommendation of what should be acceptable. It appears to be a placatory standard, set high to acknowledge the difference in initial education between radiographers and radiologists.

The specific target accuracy is left to the discretion of the Clinical Lead of the entitling employer, and fairly recent literature proposes that a target of 90% concordance is sufficient. This figure is weakly supported by a 2016 paper that found a median sensitivity of 83.4% and specificity of 90% among radiologists. Given Brady’s discussion of inter-observer variability in reporting, and findings by Buskov et al. that radiographers can outperform junior radiologists in terms of accuracy, a flexible concordance target seems reasonable, with the caveat that all reporters engage constructively with peer learning from confirmed discrepancies.

Audit

Regardless of role, the Health and Care Professions Council (HCPC) requires registrants to seek feedback and use it to improve their practice. Specific mechanisms for auditing performance include random, prospective peer review, in which a set percentage of cases is selected for second reading, and learning from discrepancies identified further along the clinical pathway, such as through discussion at Radiology Events and Learning Meetings (REALMs). The value of either approach varies with the circumstances, practitioner engagement, case volume, and whether a culture of blame or of collaborative improvement exists.

Woznita et al. suggest that a minimum of 5% of a reporter's cases should be peer reviewed at least monthly; however, SCIN recommends that, following the preceptorship period, practitioners progress to annual audit, with those who fall below the agreed accuracy level having their learning needs addressed and returning to monthly audit.
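The two audit mechanics described above can be sketched as follows. This is one possible local scheme under stated assumptions, not a prescribed method: cases are sampled uniformly at random, the 5% figure is rounded up so that at least one case is always reviewed, and the audit-frequency rule simply encodes "monthly during preceptorship or after falling below the agreed level, otherwise annual". The function names and accession-number format are hypothetical.

```python
import random


def select_for_peer_review(case_ids, percent=5, seed=None):
    """Randomly pick at least `percent`% of cases (minimum one) for second reading."""
    cases = list(case_ids)
    if not cases:
        return []
    k = max(1, (len(cases) * percent + 99) // 100)  # integer ceiling of n * percent / 100
    return random.Random(seed).sample(cases, k)  # sampling without replacement


def next_audit_frequency(accuracy_percent, agreed_percent, in_preceptorship):
    """Assumed rule: monthly during preceptorship or below the agreed level, else annual."""
    if in_preceptorship or accuracy_percent < agreed_percent:
        return "monthly"
    return "annual"


month_cases = [f"ACC{n:04d}" for n in range(1, 121)]  # 120 hypothetical accession numbers
sample = select_for_peer_review(month_cases, seed=42)
print(len(sample))                                               # 6
print(next_audit_frequency(92.0, 95, in_preceptorship=False))    # monthly
```

Fixing the `seed` makes a given month's selection reproducible for the audit record, while leaving it as `None` gives a fresh random selection each time.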

Feeding back

Anyone can feed back to a reporter, and reporters generally want feedback. If (after doing some reading and convincing yourself that you’re genuinely on to something) you suspect that a study has been misinterpreted, or are concerned that something has been missed, get in touch with the person who wrote the report and (politely) highlight your concern. A good approach is to frame your enquiry as a personal learning opportunity: “I was wondering about the report for patient X. I thought there might be Y, but you’ve reported it as Z. Would you mind explaining why Y wasn’t correct?”

The worst that can happen is that you get ignored or rad-splained at, but you might also improve someone’s practice, if only your own.